Cloud network management isn’t optional anymore. Most businesses run workloads across multiple cloud providers, and without proper management, costs spiral, security gaps appear, and performance suffers.

At Clouddle, we’ve seen firsthand how the right approach transforms cloud operations. This guide walks you through the fundamentals, best practices, and real solutions to the challenges holding your business back.

What Cloud Network Management Actually Means

Understanding the Core Work

Cloud network management is the ongoing work of monitoring, controlling, and optimizing how data moves across your cloud infrastructure. It’s not a one-time setup; it’s a continuous process that touches everything from traffic routing to security enforcement to cost tracking. Most businesses treat it as an afterthought, which is why they end up paying for unused resources, experiencing unexpected downtime, and struggling with security vulnerabilities.

Cloud networks operate dynamically. Resources scale up and down, workloads shift between regions, and traffic patterns change constantly. Without proper management, you lose visibility into what’s running, where data flows, and whether your infrastructure performs efficiently.

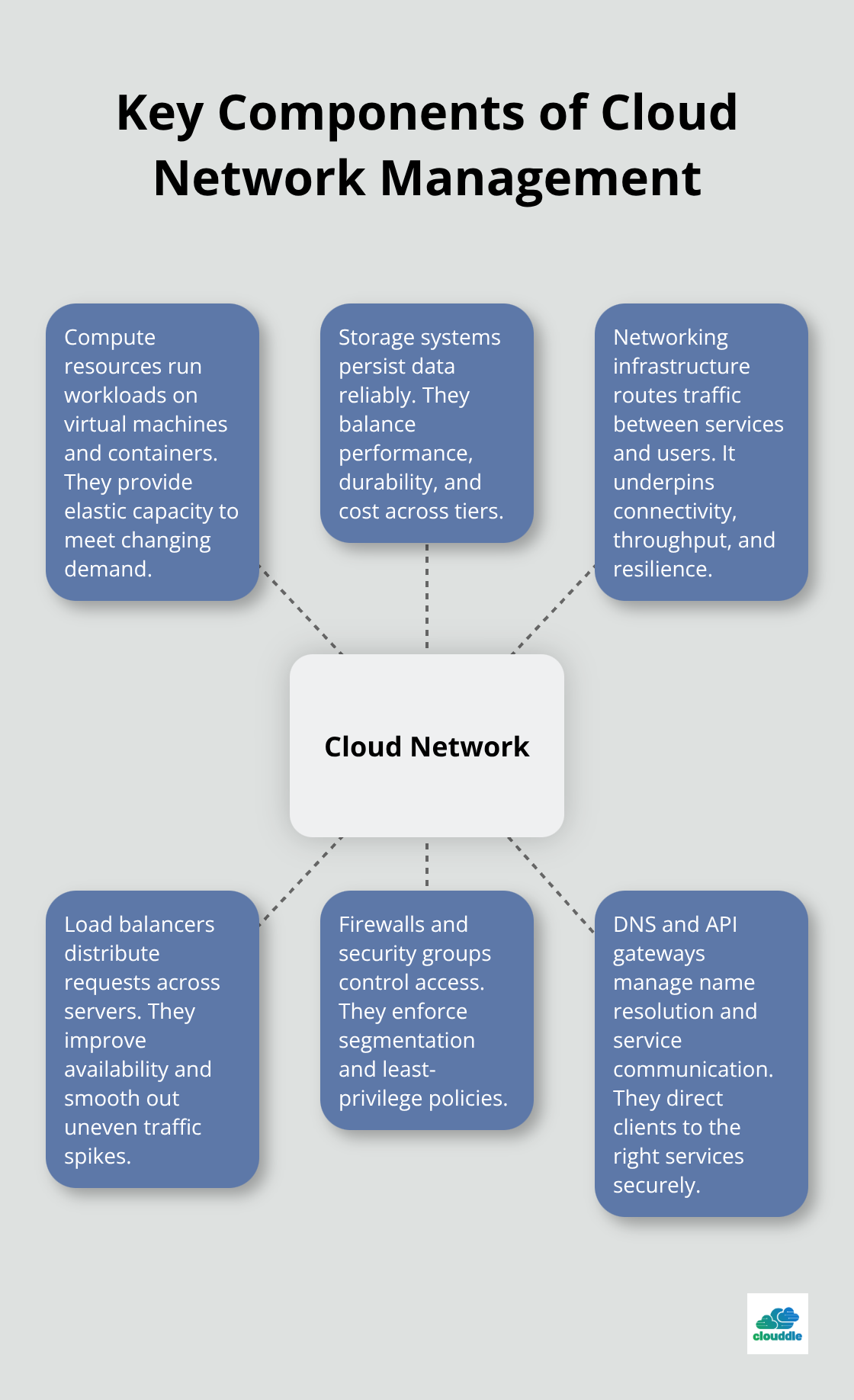

The Components That Require Management

Your cloud network consists of interconnected components that work together: compute resources like virtual machines and containers, storage systems for data persistence, networking infrastructure that routes traffic, and the virtualization layer that ties everything together. Modern cloud networks also include load balancers that distribute traffic across servers, firewalls and security groups that control access, DNS services that resolve domain names, and API gateways that manage service communication.

Each component generates data about performance, security, and usage. The problem is that most businesses don’t systematically collect or analyze this data. They react to problems after they happen rather than preventing them.

Why Visibility Transforms Operations

Proper management means you collect metrics on bandwidth utilization, latency, packet loss, and error rates across all network segments. You also track security events, access patterns, and configuration changes. This visibility lets you spot bottlenecks before they cause outages, identify security threats before they escalate, and optimize resource allocation before costs become unmanageable.

Businesses that skip dedicated network management typically face three consequences: uncontrolled cloud spending, inconsistent application performance, and difficulty meeting compliance requirements. Organizations without proper network oversight often see their monthly cloud bills increase 30 to 50 percent year-over-year, largely because they run unused resources and inefficient configurations. Performance suffers because traffic isn’t optimized, latency isn’t minimized, and failover mechanisms don’t work smoothly. Compliance becomes a nightmare because you can’t prove who accessed what, when systems changed, or whether data remained encrypted in transit.

The Path Forward

Dedicated management solves these problems. It means assigning responsibility for network operations, implementing automated monitoring and response systems, and building processes around configuration management and change control. The investment pays for itself through reduced cloud costs, fewer performance incidents, faster incident response times, and confidence that you meet regulatory requirements.

These fundamentals set the stage for what comes next: the specific practices that separate high-performing cloud operations from struggling ones.

How to Actually Monitor and Optimize Cloud Networks

Collect Data and Act on It Systematically

Real cloud network optimization starts with data collection, not guesswork. Most teams collect metrics but fail to act on them systematically. AWS CloudWatch, Azure Monitor, and Google Cloud Operations provide visibility into bandwidth utilization, latency, packet loss, and error rates across your infrastructure. The critical step happens next: you establish baselines for normal performance, then set alerts that fire when metrics deviate from them. A 50-millisecond increase in latency might seem minor until you realize it can cost an e-commerce platform 1 to 2 percent in conversion rate. Datadog APM and New Relic track end-user experience directly, showing which application components actually impact customers.
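To make the baseline-then-alert step concrete, here is a minimal sketch in Python. It assumes you have already exported a list of historical samples for one metric; the sample values, the function names, and the three-standard-deviation threshold are illustrative assumptions, not settings from any particular monitoring product.

```python
from statistics import mean, stdev

def build_baseline(samples):
    """Return (mean, standard deviation) for historical samples of one metric."""
    return mean(samples), stdev(samples)

def deviates(value, baseline, threshold_sigmas=3.0):
    """True when value is more than threshold_sigmas deviations from the baseline mean."""
    avg, sd = baseline
    if sd == 0:
        # A perfectly flat history: any change at all counts as a deviation.
        return value != avg
    return abs(value - avg) / sd > threshold_sigmas

# Hypothetical latency samples (milliseconds) from a normal period.
history_ms = [42, 40, 45, 43, 41, 44, 42, 43]
baseline = build_baseline(history_ms)

print(deviates(44, baseline))  # within normal variation
print(deviates(95, baseline))  # far outside the baseline
```

In practice the managed monitoring services handle this for you; the point of the sketch is that an alert threshold should be derived from measured behavior rather than picked by feel.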

Network bottlenecks typically appear in three places: between your application and database layers where unoptimized queries create traffic spikes, at load balancers when traffic distribution becomes uneven, and between regions when data moves across geographic boundaries. The fix requires action, not observation. If your load balancers show uneven traffic distribution, reconfigure them to use a least-connections algorithm instead of round-robin. If inter-region traffic causes latency, implement caching strategies and CDNs like Cloudflare or Akamai to reduce data movement.

Most teams waste time collecting metrics they never review. Set up automated dashboards that surface the three to five metrics that actually matter for your business, such as API response time, database query latency, and bandwidth costs. Review these weekly. This prevents the common mistake of drowning in data while missing obvious problems.

Enforce Security Controls Continuously

Security protocols in cloud networks fail when teams treat them as one-time implementations rather than continuous processes. Role-Based Access Control and multi-factor authentication represent the foundation, but most businesses stop there. Network segmentation requires separating your infrastructure into zones based on function and sensitivity, then enforcing firewall rules between zones. A database zone should have stricter access rules than an application zone.

Kubernetes network policies defined in YAML control traffic between pods and services, preventing compromised containers from spreading laterally through your cluster. Enforcement depends on the cluster’s network plugin; CNI plugins such as Calico and Cilium enforce these policies at scale. Encryption must happen in two places: data in transit, using TLS (for example, terminated at ingress controllers), and data at rest, using provider-managed or customer-managed keys depending on compliance requirements.

Automated patch management keeps your infrastructure current without manual intervention. AWS Systems Manager Patch Manager, Azure Update Management, and similar tools reduce the window where vulnerabilities exist. The honest truth: most security breaches in cloud environments succeed because of configuration mistakes, not sophisticated attacks. Default deny-all policies for network traffic prevent unintended access. Egress filtering stops data exfiltration by restricting outbound traffic to only necessary destinations.
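The zone segmentation and default deny-all ideas in this section can be sketched as a small rule check in Python. The zone names, ports, and the `Rule` type are hypothetical; a real deployment would express the same logic in security groups, firewall rules, or Kubernetes network policies rather than application code.

```python
from typing import NamedTuple

class Rule(NamedTuple):
    source_zone: str
    dest_zone: str
    port: int

# Explicit allow list (hypothetical zones and ports). Anything not listed is denied.
ALLOW_RULES = {
    Rule("web", "app", 8080),
    Rule("app", "database", 5432),
}

def is_allowed(source_zone, dest_zone, port):
    """Default deny: traffic passes only if an explicit rule matches exactly."""
    return Rule(source_zone, dest_zone, port) in ALLOW_RULES

print(is_allowed("app", "database", 5432))  # explicitly allowed
print(is_allowed("web", "database", 5432))  # no rule, so denied by default
```

The design choice worth noticing is that there is no deny list at all. The web zone cannot reach the database simply because nobody wrote a rule permitting it.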

Scale Infrastructure with Security Integrated

When you scale infrastructure, security often becomes an afterthought. It shouldn’t. Scale with security controls already integrated. Infrastructure as Code tools like AWS CloudFormation and Azure Bicep let you template secure configurations and deploy them consistently. Auto-scaling policies should trigger based on actual demand metrics, not guesses.

If your CPU utilization stays below 40 percent consistently, you’re over-provisioned and wasting money. Right-sizing instances based on historical usage data cuts cloud spending significantly. Reserved instances and spot instances offer substantial cost reductions compared to on-demand pricing when used strategically: roughly 30 to 50 percent for reserved capacity and 70 to 90 percent for spot. The mistake most teams make is scaling vertically by upgrading instance sizes instead of scaling horizontally by adding more, smaller instances. Horizontal scaling distributes load better and provides resilience when individual instances fail.

Monitor your scaling events weekly to confirm they trigger correctly and adjust thresholds based on actual business patterns rather than theoretical peaks. These optimization practices form the foundation for handling the complex challenges that emerge as your cloud infrastructure grows across multiple providers and regions.

Real Solutions for Multi-Cloud Complexity

Standardize Naming and Governance Across Providers

Managing infrastructure across AWS, Azure, and Google Cloud simultaneously creates operational friction that most teams underestimate. The problem isn’t technical incompatibility; it’s the absence of unified governance and visibility. Each cloud provider uses different naming conventions, tagging structures, and security frameworks. AWS uses security groups, Azure uses network security groups, and Google Cloud uses firewall rules. Without standardized naming and tagging conventions across all three platforms, your team wastes hours searching for resources, tracking costs becomes nearly impossible, and security audits turn into nightmares.

Implement a single tagging strategy before you deploy anything: use consistent keys like environment, owner, cost-center, and application across every resource in every cloud. Infrastructure as Code tools like Terraform work across all three providers simultaneously, letting you define infrastructure once and deploy identically everywhere. Store your infrastructure definitions in version control, review changes before deployment, and maintain a single source of truth rather than managing three separate console interfaces.
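A tagging strategy only helps if it is enforced. The sketch below shows one way to check resources against the four keys named above; it assumes you have already pulled each resource’s tags into a plain dictionary (the inventory data and function name are hypothetical, and each provider has its own API for listing tags).

```python
# Required keys taken from the tagging strategy described above.
REQUIRED_TAGS = {"environment", "owner", "cost-center", "application"}

def missing_tags(resource_tags):
    """Return the required tag keys that are absent or empty, in sorted order."""
    return sorted(key for key in REQUIRED_TAGS if not resource_tags.get(key))

# Hypothetical inventory: resource name mapped to its tags.
inventory = {
    "vm-frontend-01": {"environment": "prod", "owner": "web-team",
                       "cost-center": "cc-100", "application": "storefront"},
    "bucket-logs": {"environment": "prod", "owner": ""},
}

for name, tags in inventory.items():
    gaps = missing_tags(tags)
    if gaps:
        print(f"{name} is missing: {', '.join(gaps)}")
```

A check like this fits naturally into a CI step that runs before infrastructure changes are applied, so untagged resources never reach production.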

Normalize Costs Across Different Billing Models

Cost tracking fails in multi-cloud setups because each provider calculates charges differently. Billing increments and minimum charges vary by provider and by service (per second, per minute, or per second with a one-minute minimum), so identical workloads produce bills that don’t line up. Use a cloud cost management platform like CloudHealth or Apptio to normalize these differences and show actual spend across providers in a single dashboard. Without this, your finance team receives three separate bills that don’t align, your engineering team has no incentive to optimize, and you can overspend by 25 to 40 percent simply from poor visibility.
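To see why billing granularity matters, the following Python sketch computes the billed duration for the same runtime under the three styles mentioned above: per second, per minute, and per second with a one-minute minimum. The function and its parameters are illustrative assumptions; check each provider’s current pricing documentation for the rules that apply to a given service.

```python
import math

def billed_seconds(runtime_seconds, increment=1, minimum=0):
    """Round runtime up to the billing increment, then apply the minimum charge."""
    if runtime_seconds <= 0:
        return 0
    rounded = math.ceil(runtime_seconds / increment) * increment
    return max(rounded, minimum)

runtime = 95  # a short job that ran for 95 seconds

per_second = billed_seconds(runtime)                         # 95
per_minute = billed_seconds(runtime, increment=60)           # 120
per_second_min_60 = billed_seconds(runtime, minimum=60)      # 95
short_job_min_60 = billed_seconds(45, minimum=60)            # 60

print(per_second, per_minute, per_second_min_60, short_job_min_60)
```

The differences look small for one job, but they compound across thousands of short-lived instances or containers, which is why a normalized view is worth having.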

Reduce Latency Through Strategic Data Placement

Latency and speed problems appear when data moves between cloud regions or providers unnecessarily. Most teams don’t measure inter-region latency until users complain about slow performance. Start measuring latency between your application servers, databases, and storage systems immediately; anything above 50 milliseconds between critical components signals a problem.
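Once you have latency measurements, flagging the problem links is simple. This sketch assumes you have collected round-trip samples per component pair (the sample data and function name are hypothetical) and uses the median so that a single slow request does not trigger a false alarm.

```python
from statistics import median

THRESHOLD_MS = 50  # the threshold suggested in the text above

def slow_links(measurements, threshold_ms=THRESHOLD_MS):
    """Return the component pairs whose median latency exceeds the threshold."""
    return sorted(
        link for link, samples in measurements.items()
        if median(samples) > threshold_ms
    )

# Hypothetical round-trip samples in milliseconds, keyed by (source, destination).
samples_ms = {
    ("app", "database"): [12, 15, 11, 14],
    ("app", "object-storage"): [48, 72, 65, 58],
    ("app", "cache"): [2, 3, 2, 40],  # one outlier, median still low
}

print(slow_links(samples_ms))
```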

CDNs like Cloudflare and Akamai reduce latency by caching content closer to users, which can cut response times for static assets from around 200 milliseconds to under 50 milliseconds. For dynamic content, implement read replicas of your databases in regions where users access data most frequently, rather than forcing all queries through a single primary database thousands of miles away. Load balancing spreads requests across servers, which improves application performance and reduces latency. Use a least-connections algorithm instead of round-robin distribution so load balancers send new requests to the server with the fewest active connections rather than rotating through servers sequentially.
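The difference between the two load-balancing strategies is easy to see in a few lines of Python. This is a toy illustration with a hypothetical connection snapshot, not how any particular load balancer is implemented.

```python
from itertools import cycle

def least_connections(active_connections):
    """Pick the server with the fewest active connections (first one wins a tie)."""
    return min(active_connections, key=active_connections.get)

# Hypothetical snapshot of open connections per backend server.
active = {"app-1": 12, "app-2": 3, "app-3": 7}

round_robin = cycle(active)             # rotates through servers in order
rr_choice = next(round_robin)           # next in rotation, regardless of load
lc_choice = least_connections(active)   # the least busy server right now

active[lc_choice] += 1                  # the new request becomes an open connection
print(rr_choice, lc_choice)
```

Round-robin sends the request to `app-1` even though it is already the busiest server, while least-connections picks `app-2`. The gap matters most when request durations vary widely, because long-lived connections pile up unevenly under pure rotation.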

Caching at the application layer eliminates redundant database queries: Redis or Memcached can reduce database load by 60 to 80 percent when configured properly.

Right-Size Instances and Reserve Capacity Strategically

Controlling costs while maintaining quality requires relentless focus on resource utilization. Right-sizing instances cuts spending dramatically: if your production servers average 25 percent CPU utilization, you’re paying for roughly four times the compute you actually use. Analyze actual usage patterns over 30 days, then downsize to instances that run at 60 to 70 percent utilization under normal load.
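The right-sizing arithmetic can be written down directly. The sketch below assumes CPU is the limiting resource and uses 65 percent as the target, the midpoint of the 60 to 70 percent range above; the function names and the 16-vCPU example are hypothetical. Memory, network, and peak load should be checked the same way before downsizing.

```python
import math

def needed_capacity_fraction(avg_utilization, target_utilization=0.65):
    """Fraction of current capacity needed to run the same load at the target utilization."""
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    return avg_utilization / target_utilization

def rightsized_vcpus(current_vcpus, avg_utilization, target_utilization=0.65):
    """Smallest whole number of vCPUs that keeps utilization at or below the target."""
    fraction = needed_capacity_fraction(avg_utilization, target_utilization)
    return math.ceil(current_vcpus * fraction)

# A 16-vCPU instance averaging 25 percent CPU over the measurement window.
print(rightsized_vcpus(16, 0.25))  # 7
```

Since instance sizes usually come in fixed steps, the result is a lower bound: in this example you would pick the next available size at or above 7 vCPUs.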

Reserved instances cost 30 to 50 percent less than on-demand pricing for predictable workloads, while spot instances cost 70 to 90 percent less for non-critical or batch workloads that tolerate interruptions. The mistake most teams make is buying one-year reserved instances for everything, then upgrading hardware halfway through the contract. Instead, reserve only your baseline capacity (the minimum you always need), then use on-demand or spot for variable demand.
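Splitting demand into a reserved baseline and a variable remainder can be sketched as follows. The hourly instance counts are hypothetical, and using the observed minimum as the baseline is one simple choice among several; some teams reserve slightly above the minimum if demand rarely dips that low.

```python
def split_capacity(hourly_demand):
    """Return (baseline to reserve, per-hour burst to cover with on-demand or spot)."""
    baseline = min(hourly_demand)
    burst = [demand - baseline for demand in hourly_demand]
    return baseline, burst

# Hypothetical number of instances needed in each of six sample hours.
demand = [4, 4, 6, 10, 8, 4]

baseline, burst = split_capacity(demand)
print(baseline)  # instances that are always needed: reserve these
print(burst)     # extra instances per hour: cover with on-demand or spot
```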

Implement Tiered Storage for Long-Term Savings

Tiered storage can save 40 to 60 percent on storage costs: keep hot data in fast storage, move warm data to slower but cheaper storage after 30 days, and move cold data that nobody accesses to archival storage after 90 days. Monitor your actual data access patterns for 60 days before implementing tiering so you base decisions on real behavior, not assumptions.
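The tiering rule above reduces to a small classification function. The sketch assumes you track days since each object was last accessed; the tier names and object keys are hypothetical, and in practice the providers’ own lifecycle policies apply such rules automatically.

```python
def storage_tier(days_since_last_access):
    """Map object age (days since last access) to a tier using the 30/90-day rule."""
    if days_since_last_access < 30:
        return "hot"
    if days_since_last_access < 90:
        return "warm"
    return "cold"

# Hypothetical objects and the number of days since each was last read.
objects = {"invoice-2024.pdf": 3, "q1-report.csv": 45, "old-backup.tar": 400}

for name, age_days in objects.items():
    print(name, storage_tier(age_days))
```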

Final Thoughts

Cloud network management transforms from a technical burden into a competitive advantage when you stop treating it as an afterthought. The businesses winning in their markets aren’t the ones with the most expensive infrastructure; they’re the ones who see clearly into their networks, respond quickly to problems, and optimize relentlessly. Visibility into your infrastructure prevents surprises, security controls integrated into your operations stop breaches before they happen, and scaling based on actual demand rather than guesses keeps costs reasonable.

Real implementation starts small. Pick one metric that matters to your business (API response time, database latency, or monthly cloud spend, for example) and establish a baseline this week. Set up automated alerts so you know immediately when performance degrades, then audit your access controls and confirm that only necessary people can reach sensitive systems. Organizations that implement these practices typically see cloud spending stabilize within 60 days and incident response times drop by 50 percent.

Multi-cloud complexity doesn’t require perfect solutions; it requires consistent governance. Standardize your naming conventions, implement Infrastructure as Code across all providers, and use a unified cost management platform to track spending across AWS, Azure, and Google Cloud simultaneously. We at Clouddle help businesses implement these practices through Network as a Service solutions that combine networking, security, and support without requiring massive upfront investment.