IT downtime costs businesses an average of $5,600 per minute, according to Gartner research. Yet most organizations still lack a structured approach to IT problem management.

At Clouddle, we’ve seen firsthand how reactive IT teams struggle while proactive ones thrive. The difference lies in having clear processes, the right tools, and a commitment to prevention over firefighting.

What Really Breaks Your IT Infrastructure

Network Outages Cost More Than You Think

Network outages hit harder than most organizations expect. Significant downtime can run businesses as much as $2 million for every hour operations are down. But the financial damage tells only part of the story. When your network goes down, your team cannot access customer data, your sales pipeline freezes, and your reputation takes a hit. In hospitality, multi-family, and senior living organizations, network reliability directly impacts guest satisfaction and operational continuity. A 30-minute outage in a hotel does not just lose revenue; it damages trust.

Most organizations treat network failures as isolated incidents rather than symptoms of deeper infrastructure weaknesses. You patch the immediate problem, get systems back online, and move on. Then it happens again in three weeks, and you face the same crisis.

Security Breaches and the Hidden Operational Cost

Security breaches and data loss represent a different category of problem entirely. IBM’s 2024 data breach report found that the average cost of a data breach reached $4.88 million, with healthcare and financial services experiencing the highest costs. But the financial figure masks the operational chaos: your team spends weeks on forensics, notification, and remediation while your business grinds to a halt. The real damage extends beyond dollars: your organization loses credibility with customers and regulators.

Performance Degradation: The Invisible Productivity Killer

Performance degradation (when systems slow down rather than fail completely) is arguably worse because it remains invisible to executives. Users report sluggish applications, slow file transfers, and delayed database queries, but there is no outage to point to. Productivity drops by 30-40% according to Forrester research, yet the problem stays under the radar until it becomes critical. The core issue is that most organizations lack visibility into what actually happens in their infrastructure. You do not know if a problem stems from misconfigured network settings, undersized hardware, security vulnerabilities, or poor change management practices.

Why Root Cause Analysis Changes Everything

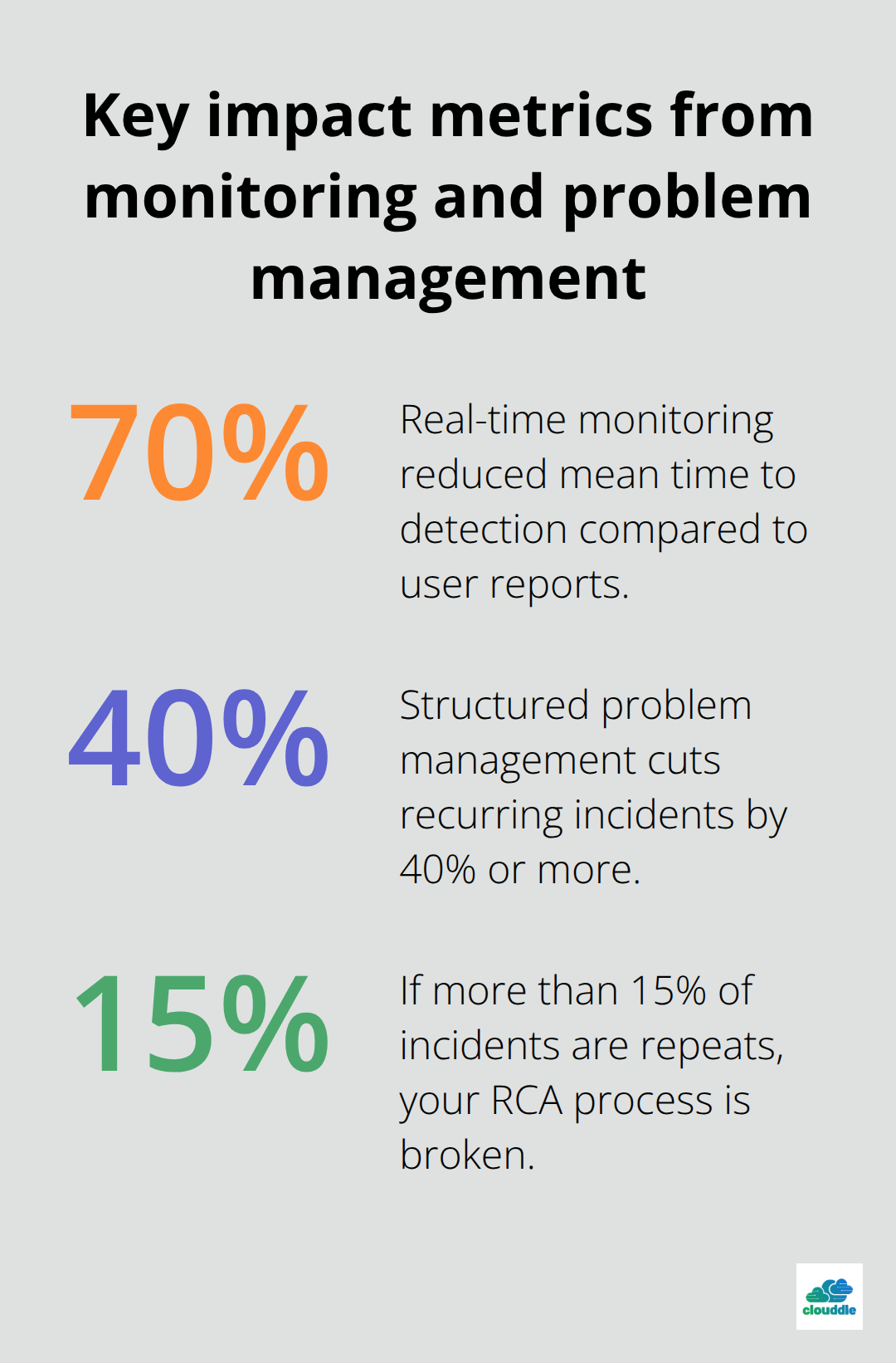

Without visibility, you cannot prevent the next incident. This is where structured problem management becomes non-negotiable. Instead of treating each incident as a standalone event, you need to capture root causes, document them in a Known Error Database, and use that knowledge to prevent recurrence. Organizations that implement this approach see recurring incidents drop by 40% or more. The investment in proper tooling and processes pays for itself within months through reduced downtime, fewer escalations, and happier users. The question is not whether you can afford to implement problem management; it is whether you can afford not to. The next section shows you exactly how to build this capability.

Building a Problem Management System That Actually Works

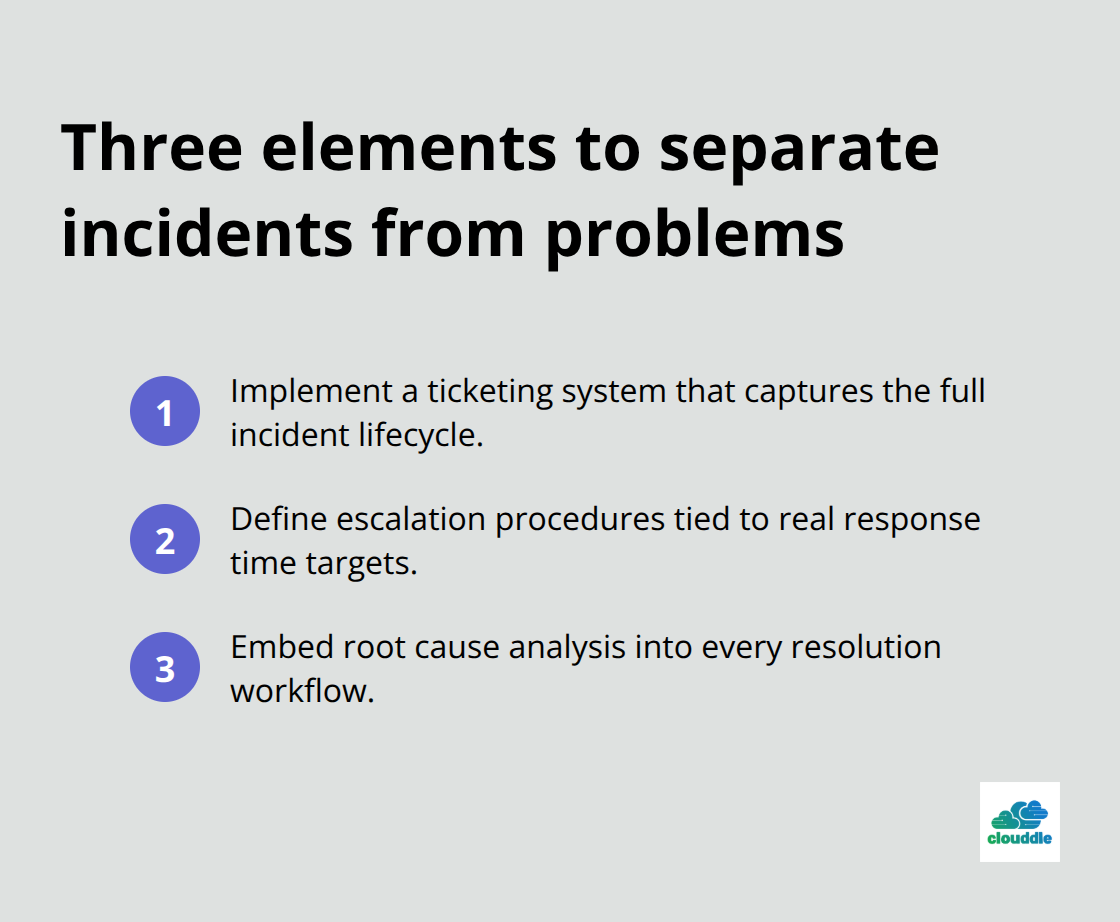

Separate Incidents from Problems to Track Patterns

The moment you stop treating incidents as one-off events and start connecting them to underlying problems, your IT stability transforms. This requires three concrete elements: a ticketing system that captures the full incident lifecycle, escalation procedures tied to actual response time targets, and root cause analysis embedded into your resolution workflow. Most organizations fail at this stage because they implement the tools without changing how their teams work.

A ticketing system sitting on top of reactive firefighting delivers almost no value.

You need the system to enforce a structured process where every ticket links to a problem record, every problem gets analyzed for root cause, and every root cause feeds into a Known Error Database that prevents the next occurrence. Start by separating incidents from problems in your ticketing system. An incident is the symptom: your network is down, or users cannot access files. A problem is the underlying cause: a misconfigured switch, a firmware bug, or inadequate capacity planning. Your ticketing tool must enforce this distinction with dedicated problem records that live separately from incident tickets. When you merge them, you lose the ability to track patterns and prevent repeats.
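As a rough illustration of that separation, the sketch below models incidents and problems as distinct records joined by an explicit link. The class names, field names, and ID formats (`INC-1`, `PRB-1`) are hypothetical and not taken from any particular ITSM product; a real tool would supply its own schema.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Problem:
    """Underlying cause, tracked separately from the incidents it produces."""
    problem_id: str
    summary: str
    root_cause: Optional[str] = None   # filled in once analysis is complete
    workaround: Optional[str] = None   # what a Known Error entry would carry
    incident_ids: List[str] = field(default_factory=list)


@dataclass
class Incident:
    """A single symptom report, e.g. 'users cannot access files'."""
    incident_id: str
    description: str
    problem_id: Optional[str] = None   # link to the underlying problem


def link_incident(incident: Incident, problem: Problem) -> None:
    """Attach an incident to a problem so that repeats become visible."""
    incident.problem_id = problem.problem_id
    if incident.incident_id not in problem.incident_ids:
        problem.incident_ids.append(incident.incident_id)


# Two different symptoms that trace back to the same (hypothetical) cause.
problem = Problem("PRB-1", "Misconfigured core switch")
symptoms = ["Network down on floor 2", "Users cannot access files"]
for n, text in enumerate(symptoms, start=1):
    link_incident(Incident(f"INC-{n}", text), problem)

print(len(problem.incident_ids))  # 2
```

Because the link lives on both records, counting the incidents attached to a problem is a direct lookup rather than a search through free-text ticket notes.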

Define Response Times Based on Business Impact

Set response time targets based on business impact, not arbitrary numbers. A network outage affecting your entire guest-facing system demands a 15-minute response from your senior network engineer. A single user’s email delay might warrant a 4-hour response. Assign clear ownership for each escalation level: first-line support owns initial triage within 30 minutes, the infrastructure team owns escalation within 1 hour, and your vendor contacts own critical hardware failures within 2 hours.

Document these thresholds and make them visible to everyone. When escalation rules sit in someone’s head, they fail during pressure. Your team needs to know exactly when to escalate and to whom, without waiting for permission or guidance. This clarity accelerates response times and reduces the chaos that accompanies major incidents.
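One way to get those thresholds out of people's heads is to keep them as data that both staff and tooling can read. The sketch below encodes the example figures from this section; the key names, the `current_owner` and `response_breached` helpers, and the reading of the ladder as "the first owner whose deadline has not yet passed" are all assumptions made for illustration.

```python
from datetime import timedelta

# Response targets by business impact, using the example figures above.
RESPONSE_TARGETS = {
    "guest_facing_outage": timedelta(minutes=15),
    "single_user_issue": timedelta(hours=4),
}

# Escalation ladder: (owner, deadline measured from ticket creation).
ESCALATION_LADDER = [
    ("first_line_support", timedelta(minutes=30)),
    ("infrastructure_team", timedelta(hours=1)),
    ("hardware_vendor", timedelta(hours=2)),
]


def current_owner(elapsed: timedelta) -> str:
    """Return the first owner whose deadline has not yet passed."""
    for owner, deadline in ESCALATION_LADDER:
        if elapsed <= deadline:
            return owner
    # Past every deadline: the ticket stays with the last level.
    return ESCALATION_LADDER[-1][0]


def response_breached(impact: str, elapsed: timedelta) -> bool:
    """True when the elapsed time exceeds the target for this impact level."""
    return elapsed > RESPONSE_TARGETS[impact]


print(current_owner(timedelta(minutes=45)))                             # infrastructure_team
print(response_breached("guest_facing_outage", timedelta(minutes=20)))  # True
```

Keeping the table in one place means a change to a target is a one-line edit that everyone can see, rather than a verbal agreement.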

Use Root Cause Analysis to Break the Repeat Cycle

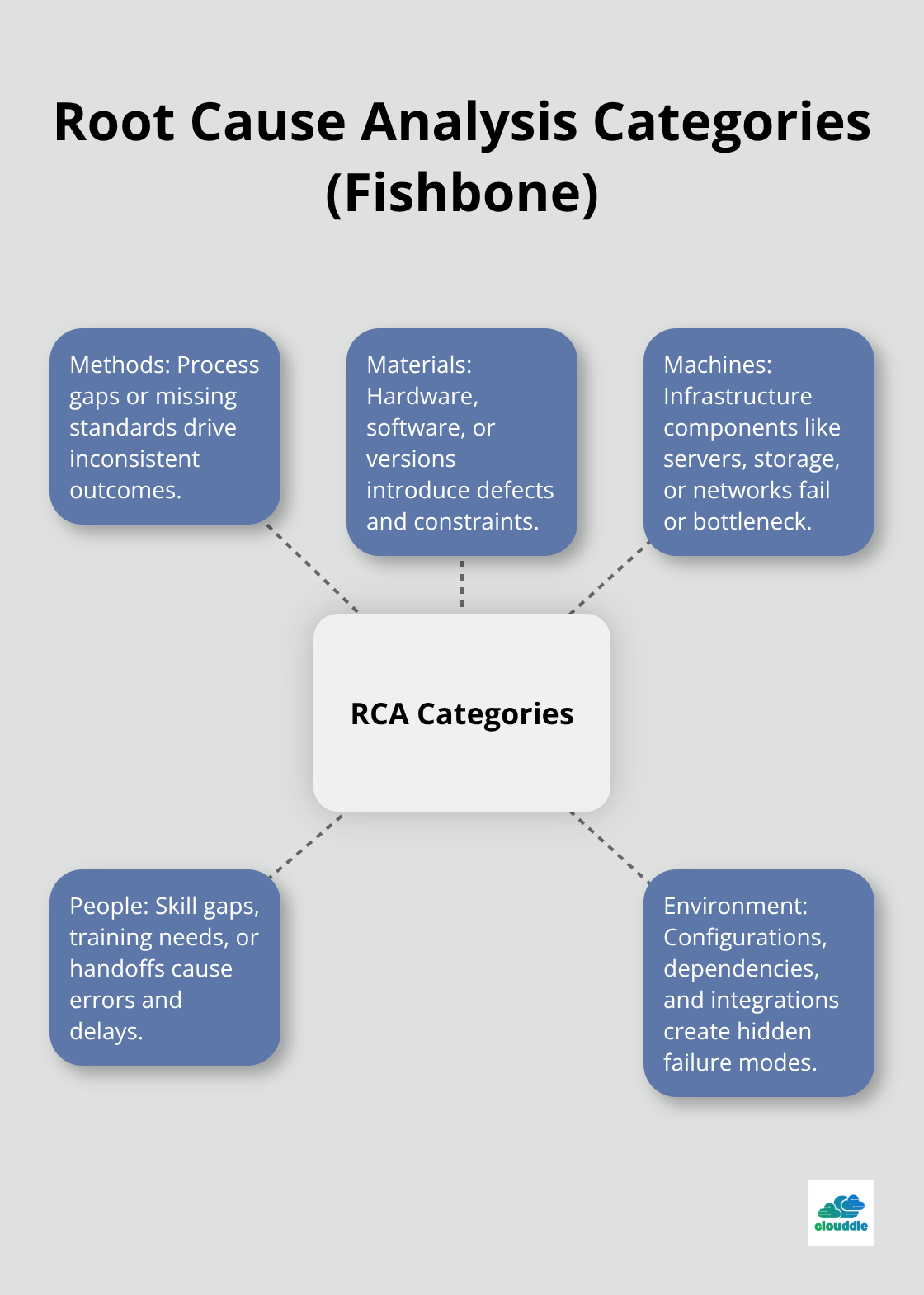

Root cause analysis separates organizations that fix problems from those that repeat them endlessly. The 5 Whys technique works for straightforward issues. Your database locked up because a backup process consumed all disk space, which happened because no one monitored disk usage, which happened because monitoring was never configured. Three whys in, you’ve found the real problem and can implement a lasting fix.

For complex infrastructure failures, the Ishikawa Fishbone diagram forces your team to examine causes across five categories: methods (your processes), materials (hardware and software), machines (infrastructure components), people (skill gaps and training), and environment (configuration and dependencies). A financial institution reduced service disruptions by over 40% by integrating proactive problem management with a Known Error Database and cross-team collaboration.

Assign Ownership and Document Everything

Your problem manager, ideally a single person who owns the entire lifecycle, coordinates this analysis and ensures findings don’t disappear. Assign this role to someone senior enough to influence change and vendor negotiations. Use your ITSM tool to document every root cause analysis in a structured format: what broke, why it broke, what you did to fix it, and what you’ll do to prevent it.

This creates an institutional memory that survives staff turnover and accelerates resolution of similar issues. When your second-line team encounters a problem they’ve seen before, they pull the Known Error Record, apply the documented workaround, and resolve it in minutes instead of hours.

Measure What Matters to Drive Improvement

Track two metrics ruthlessly: how many incidents recur within 30 days, and how much time your team spends on repeat problems. If more than 15% of your incidents are repeats, your root cause analysis process is broken. These numbers reveal whether your problem management system actually prevents recurrence or simply creates paperwork. Once you establish this baseline, you’re ready to move beyond internal processes and examine the tools and expertise that accelerate problem resolution across your entire operation.
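The first of those metrics can be computed from a ticket export along the following lines. This is a minimal sketch that assumes each incident is already linked to a problem ID and is represented as a `(problem_id, opened_at)` tuple; the `repeat_rate` function and the sample data are made up for illustration.

```python
from datetime import datetime, timedelta


def repeat_rate(incidents, window=timedelta(days=30)):
    """Fraction of incidents opened within `window` of an earlier
    incident that is linked to the same problem."""
    last_seen = {}
    repeats = 0
    for problem_id, opened_at in sorted(incidents, key=lambda item: item[1]):
        previous = last_seen.get(problem_id)
        if previous is not None and opened_at - previous <= window:
            repeats += 1
        last_seen[problem_id] = opened_at
    return repeats / len(incidents) if incidents else 0.0


# Illustrative data only.
incidents = [
    ("PRB-1", datetime(2024, 1, 1)),
    ("PRB-2", datetime(2024, 1, 5)),
    ("PRB-1", datetime(2024, 1, 20)),  # 19 days after the first PRB-1: a repeat
    ("PRB-1", datetime(2024, 3, 15)),  # 55 days after the previous one: not a repeat
]

rate = repeat_rate(incidents)
print(f"{rate:.0%}")  # 25%
print(rate > 0.15)    # True: above the 15% threshold discussed above
```

The comparison is against the most recent earlier incident for the same problem, so a long quiet period resets the clock; whether that matches your definition of a repeat is a policy choice.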

Tools That Actually Stop Problems Before They Happen

Real-Time Monitoring Detects Problems at Scale

Monitoring software sits at the intersection of prevention and detection. Organizations that invested in real-time monitoring reduced their mean time to detection by 70% compared to those relying on user reports. This matters because the faster you detect a problem, the smaller the blast radius.

A memory leak detected in your application server while it has consumed only 2% of available memory can be fixed during a maintenance window. The same leak discovered at 95% utilization crashes your entire environment at 3 AM.

Automated monitoring feeds your problem management process with data that reveals patterns before incidents occur. Tools like Prometheus paired with alerting rules catch anomalies in CPU usage, disk I/O, and network latency before users experience slowdowns. The key is not deploying monitoring everywhere but instrumenting the systems that directly impact your business.

Instrument Systems That Drive Revenue

For hospitality and senior living organizations, guest-facing network infrastructure and Wi-Fi performance demand continuous monitoring because downtime directly translates to lost revenue and damaged reputation. Your monitoring tool should integrate with your ITSM platform so alerts automatically create incidents and link to existing problems.

When your database monitoring detects a slow query, it should trigger an incident ticket, check your Known Error Database for similar issues, and suggest the documented workaround if one exists. This automation compresses resolution time from hours to minutes.
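The flow described above can be sketched in a few lines. Everything here is hypothetical: the in-memory `KEDB` dictionary stands in for a real Known Error Database, the `(service, symptom)` lookup key is an assumed matching scheme, and the sample entry and workaround text are invented for the example. A real integration would go through your monitoring and ITSM tools' own APIs.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class KnownError:
    problem_id: str
    workaround: str


# Stand-in Known Error Database keyed by (service, symptom).
KEDB: Dict[Tuple[str, str], KnownError] = {
    ("orders-db", "slow_query"): KnownError(
        "PRB-7", "Rebuild the index on the orders table"
    ),
}


def handle_alert(service: str, symptom: str) -> dict:
    """Turn a monitoring alert into an incident ticket, pre-filled
    with a known workaround when a matching entry exists."""
    known: Optional[KnownError] = KEDB.get((service, symptom))
    return {
        "title": f"{symptom} on {service}",
        "problem_id": known.problem_id if known else None,
        "suggested_workaround": known.workaround if known else None,
    }


ticket = handle_alert("orders-db", "slow_query")
print(ticket["problem_id"])            # PRB-7
print(ticket["suggested_workaround"])  # Rebuild the index on the orders table

unknown = handle_alert("wifi-controller", "high_latency")
print(unknown["problem_id"])           # None: no match, needs fresh analysis
```

An alert with no match still produces a ticket, just without a suggested workaround, which is the signal that a new problem record and root cause analysis are needed.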

Leverage External Expertise for Complex Problems

Your problem management system cannot survive on tools and process alone. You need expertise available when your team hits a wall. Managed IT services provide 24/7 access to engineers who have seen thousands of infrastructure problems and know how to diagnose them systematically. The advantage is not just coverage during nights and weekends but the institutional knowledge that prevents your team from reinventing solutions.

When your senior living facility experiences a network connectivity issue affecting resident Wi-Fi, a managed services team applies structured root cause analysis techniques like the 5 Whys or Fishbone diagrams immediately rather than stumbling through troubleshooting. They document findings in a format your internal team can understand and reuse.

Build Internal Capability Through Structured Training

Staff training and certification programs close the gap between having tools and using them effectively. An engineer trained in ITIL problem management practices approaches incidents differently than one who has only received vendor product training. They understand the distinction between symptoms and root causes, they know how to document findings for future reference, and they recognize when to escalate versus when to dig deeper.

Organizations that invest in structured training programs see their problem resolution times drop by 25-35% within six months. The investment in certification programs like ITIL Foundation or vendor-specific problem management training pays for itself through faster incident resolution and fewer repeat problems. Your team learns not just what to do but why the process matters, which drives adoption and prevents shortcuts during high-pressure situations.

Combine Monitoring, Expertise, and Training

The combination of proactive monitoring, external expertise during critical incidents, and internal training creates a problem management capability that scales with your organization’s growth. Each element reinforces the others: monitoring provides the data, expertise applies proven frameworks, and training ensures your team can sustain the practice long-term.

Final Thoughts

IT problem management compounds over time as organizations separate incidents from problems, invest in root cause analysis, and combine monitoring with external expertise and staff training. The financial case justifies the investment immediately: downtime costs $5,600 per minute according to Gartner, so at that rate a two-hour (120-minute) network outage costs your organization $672,000 in lost productivity alone. Structured problem management reduces recurring incidents by 40% or more, which translates directly to fewer outages, shorter resolution times, and teams that focus on growth instead of crisis response.

Three concrete steps transform your infrastructure stability. Deploy monitoring that catches problems before users notice them, paired with a ticketing system that enforces the distinction between incidents and problems. Establish clear escalation procedures and response time targets so your team knows exactly when to escalate and to whom. Document root causes in a Known Error Database and apply that knowledge to prevent the next occurrence.

For hospitality, multi-family, and senior living organizations where network reliability directly impacts revenue and reputation, this approach matters enormously. We at Clouddle help organizations build this capability through managed IT services, proactive monitoring, and 24/7 support that applies proven problem management frameworks. Clouddle’s Network as a Service combines networking, security, and support in one bundled solution designed for organizations that cannot afford downtime.