Before you can fix a network delay, you have to find it. This seems obvious, but it’s a step I see people skip all the time. They jump straight to buying new hardware or calling their Internet Service Provider (ISP), when the real culprit might be something completely different.

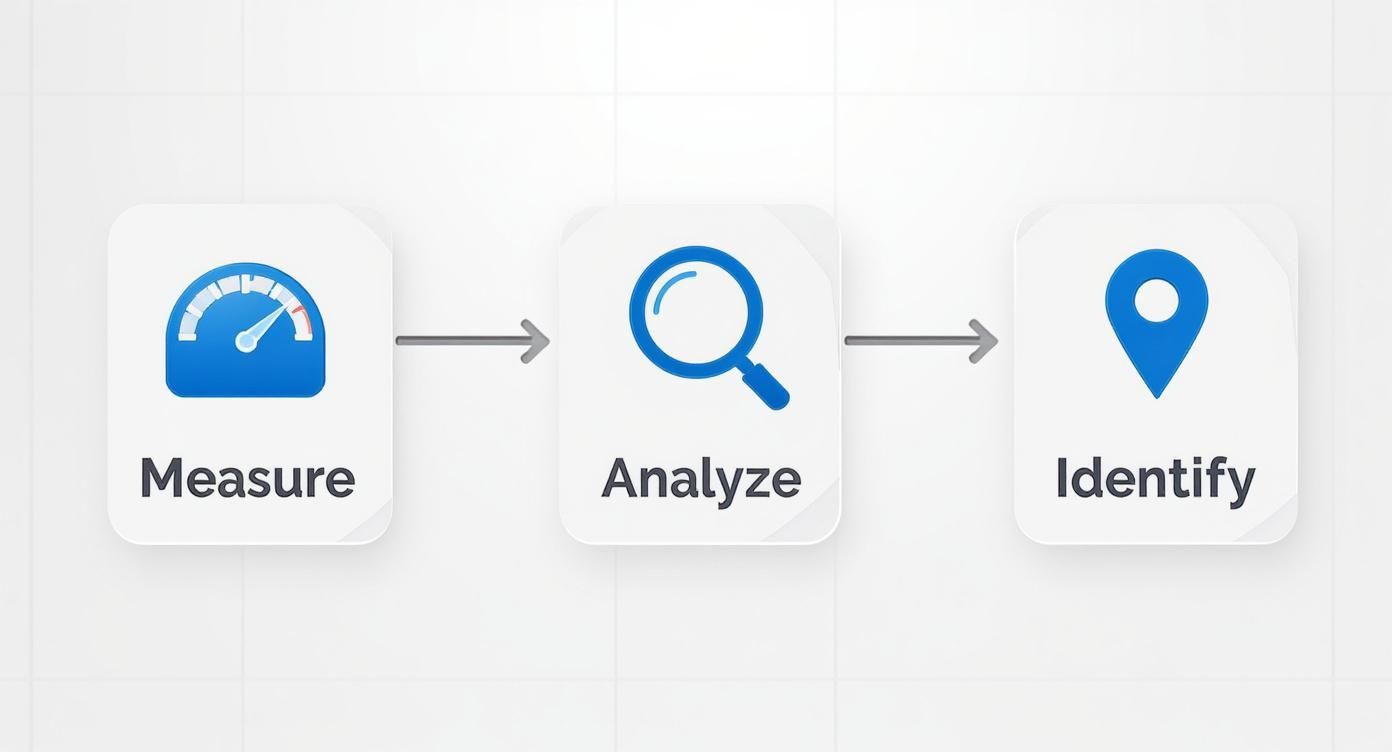

To effectively tackle network latency, you need a methodical approach: measure your current performance, analyze the data to find the bottlenecks, and then go after the root cause. This means looking at everything—from your Wi-Fi and physical cabling all the way out to your ISP connection—to figure out exactly where the delays are happening.

Pinpointing the Source of Network Latency

Network latency is the silent killer of user satisfaction. It’s that tiny delay—the time it takes for a data packet to get from point A to point B—that adds up to a frustrating experience. In places like hotels, senior living communities, or apartment buildings, a few extra milliseconds can be the difference between a seamless video call and a choppy, pixelated mess.

Trying to solve latency without a clear baseline is like navigating without a map. You can make changes, but you’ll have no real way of knowing if they’re actually helping. The first, non-negotiable step is to get a clear picture of your network’s current health.

Key Metrics That Actually Matter

When you start digging into network performance, it's easy to get lost in a sea of data. In my experience, focusing on just a few core metrics will give you the most actionable insights and tell you almost everything you need to know about the user experience.

- Ping (Latency): This is the classic one: the round-trip time (RTT) for a packet to travel to a destination and back. A high ping is the most direct sign of a laggy, unresponsive connection.

- Jitter: This measures the variation in your ping over time. High jitter means your latency is inconsistent, which is a nightmare for real-time applications like VoIP or online gaming. It's what causes that choppy audio or sudden lag spikes.

- Packet Loss: This is the percentage of data packets that get lost in transit and never arrive. Even a small amount of packet loss, like 1-2%, can be devastating: every lost packet has to be retransmitted, and TCP treats loss as a sign of congestion and slows its sending rate, so both throughput and responsiveness suffer.
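To turn these three metrics into numbers you can track, here is a minimal Python sketch that summarizes a batch of ping results. It assumes you have already collected the round-trip times (for example, by parsing `ping` output) and uses one simple definition of jitter, the mean absolute change between consecutive samples. Other tools, such as RTP receivers following RFC 3550, use a smoothed estimate instead, so the jitter figure won't match every tool exactly.

```python
from statistics import mean

def summarize_probes(rtts_ms):
    """Summarize a batch of ping results.

    rtts_ms: list of round-trip times in milliseconds, with None for a probe
    that never came back.
    """
    if not rtts_ms:
        raise ValueError("need at least one probe")
    received = [r for r in rtts_ms if r is not None]
    loss_pct = 100.0 * (len(rtts_ms) - len(received)) / len(rtts_ms)
    avg_ms = mean(received) if received else None
    # Jitter: mean absolute change between consecutive received RTTs.
    deltas = [abs(b - a) for a, b in zip(received, received[1:])]
    jitter_ms = mean(deltas) if deltas else 0.0
    return {"avg_ms": avg_ms, "jitter_ms": jitter_ms, "loss_pct": loss_pct}

samples = [20.0, 22.0, None, 21.0, 30.0, 20.0, 21.0, 23.0, 22.0, 21.0]
print(summarize_probes(samples))
```

A single spike (the 30 ms sample) barely moves the average but noticeably raises jitter, which is exactly the pattern that hurts calls and games.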

You have to be systematic. The diagnostic process always moves from measuring performance, to analyzing those results, and finally to identifying the specific bottleneck.

Think of this as the essential workflow for any latency investigation. You gather the raw data, make sense of it, and then pinpoint the exact cause of your network delays.

Distinguishing Internal vs. External Problems

Once you have your baseline numbers, the next challenge is figuring out where the problem is. Is it inside your building (on the Local Area Network, or LAN), or is the issue with your internet connection itself (the Wide Area Network, or WAN)?

This distinction is absolutely critical because it determines your entire troubleshooting strategy.

It's tempting to immediately blame the ISP for slow speeds. But in my experience, the bottleneck is often hiding right under your nose on the local network: an overloaded Wi-Fi channel, a faulty Ethernet cable, or an underpowered switch.

A simple way to differentiate is to run tests to a server inside your network and compare those results to tests run to a server on the internet. If your internal pings are lightning-fast but external pings are high, the problem is likely with your ISP connection. On the other hand, if even internal pings are sluggish, you know the problem is somewhere in your building.
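The internal-versus-external comparison can be expressed as a small triage function. This is a sketch: the thresholds (`lan_ok_ms`, `wan_ok_ms`) are hypothetical values chosen for illustration, and it assumes you already have RTT samples to a LAN target, such as your default gateway, and to a host on the internet. It checks the LAN first, because a slow local network also inflates every external measurement.

```python
from statistics import median

def locate_latency(internal_ms, external_ms, lan_ok_ms=5.0, wan_ok_ms=50.0):
    """Rough triage of where latency lives.

    internal_ms: RTT samples to a host on your LAN (e.g. the default gateway).
    external_ms: RTT samples to a host on the internet.
    lan_ok_ms / wan_ok_ms: hypothetical "healthy" limits for illustration.
    """
    lan = median(internal_ms)
    wan = median(external_ms)
    if lan > lan_ok_ms:
        # A slow LAN inflates every external measurement too, so check it first.
        return "local network (LAN)"
    if wan > wan_ok_ms:
        return "internet connection (WAN/ISP)"
    return "no obvious bottleneck"

print(locate_latency([1.2, 1.5, 1.1], [120, 135, 118]))  # internet connection (WAN/ISP)
```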

For a deeper dive into the specific tools and techniques for this, you can learn more about how to measure network latency in our detailed guide. Getting this foundational knowledge right is crucial for any IT manager. By establishing a solid baseline, you set the stage for every fix and upgrade that follows, ensuring you can actually measure the success of your efforts.

Fine-Tuning Your Local Network for Quick Wins

Sometimes, the most significant improvements to network latency don't require any new hardware. The biggest gains are often hiding in plain sight, right inside the configuration settings of your existing routers and switches. These software-level tweaks can deliver immediate, tangible results, making them the perfect place to start for any IT manager looking for a fast victory.

Making these changes is about making your network smarter, not just faster. By telling your network which traffic matters most, you can ensure critical applications always get the green light, even during peak usage. This is where you can see real results without spending a dime.

Prioritize Traffic with Quality of Service

Imagine your network’s bandwidth is a multi-lane highway. Without any rules, every vehicle—from a delivery truck to an ambulance—is stuck in the same jam. Quality of Service (QoS) is your network’s traffic cop, creating an express lane for that ambulance to ensure it gets through, no matter what.

In a commercial environment like hospitality or senior living, QoS is non-negotiable for delivering a predictable, high-quality experience. You can set up rules that give preferential treatment to latency-sensitive applications that just can't wait.

Common QoS Priority Use Cases:

- High Priority: VoIP phone calls, video conferencing (Zoom, Teams), and essential cloud-based business software.

- Medium Priority: General web browsing and email.

- Low Priority: Large file downloads, background software updates, and video streaming that can afford to buffer.

For example, a senior living community can use QoS to guarantee telehealth video calls are always crystal clear, even if residents in other apartments are streaming movies. By prioritizing that telehealth traffic, you prevent the frustrating freezes and dropped connections that can disrupt a critical medical consultation.
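Under the hood, the simplest QoS behavior is strict-priority queuing: whenever the link is free, the switch or router sends the highest-priority packet that is waiting. The sketch below models that with Python's `heapq`, using the three tiers from the list above. The application names and the `PRIORITY` mapping are hypothetical labels, not a vendor configuration.

```python
import heapq
from itertools import count

# Tiers from the list above: 0 = high, 1 = medium, 2 = low (hypothetical labels).
PRIORITY = {"voip": 0, "video_conf": 0, "web": 1, "email": 1,
            "bulk_download": 2, "os_update": 2, "streaming": 2}

class StrictPriorityQueue:
    """Always dequeue the highest-priority packet; FIFO within a tier."""

    def __init__(self):
        self._heap = []
        self._seq = count()  # tie-breaker that preserves arrival order

    def enqueue(self, app, packet):
        tier = PRIORITY.get(app, 1)  # unknown apps default to medium
        heapq.heappush(self._heap, (tier, next(self._seq), app, packet))

    def dequeue(self):
        _, _, app, packet = heapq.heappop(self._heap)
        return app, packet

    def __len__(self):
        return len(self._heap)

q = StrictPriorityQueue()
q.enqueue("os_update", "chunk-1")
q.enqueue("web", "GET /")
q.enqueue("voip", "rtp-1")
q.enqueue("os_update", "chunk-2")
q.enqueue("voip", "rtp-2")
order = [q.dequeue()[1] for _ in range(len(q))]
print(order)  # ['rtp-1', 'rtp-2', 'GET /', 'chunk-1', 'chunk-2']
```

Strict priority can starve the lowest tier during sustained congestion, which is why production gear usually pairs a small strict-priority queue for voice with weighted fair queuing for everything else.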

Tweak Advanced Protocol Settings

Diving deeper into your network's plumbing can unlock some serious performance gains. Two key areas to look at are the Maximum Transmission Unit (MTU) size and TCP tuning. They might sound technical, but they directly control how efficiently data is packaged and sent across your network.

The MTU sets the largest size for a single data packet. If it's set higher than some link along the path can carry, packets have to be fragmented and reassembled (or, when fragmentation isn't allowed, dropped and resent), which adds latency. If it's too low, you're sending more small packets than necessary, each carrying its own header overhead. Finding that sweet spot for your specific network can make a small but meaningful difference.
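Two pieces of arithmetic make the MTU trade-off concrete: how many packets (and header bytes) a transfer takes at a given MTU, and how to find the largest size a path accepts. The sketch assumes IPv4 and TCP headers without options (40 bytes total). `find_path_mtu` performs the same binary search you might do by hand with Don't Fragment probes (for example, Linux `ping -M do -s <size>`, where the ICMP payload size is the MTU minus 28 bytes); `probe` is a hypothetical callable standing in for that test.

```python
import math

IPV4_TCP_HEADER_BYTES = 40  # 20-byte IPv4 header + 20-byte TCP header, no options

def packets_needed(payload_bytes, mtu):
    """Number of full-size TCP segments needed to carry payload_bytes."""
    mss = mtu - IPV4_TCP_HEADER_BYTES
    return math.ceil(payload_bytes / mss)

def header_overhead_pct(payload_bytes, mtu):
    """Header bytes as a percentage of the payload."""
    return 100.0 * packets_needed(payload_bytes, mtu) * IPV4_TCP_HEADER_BYTES / payload_bytes

def find_path_mtu(probe, low=576, high=1500):
    """Binary-search the largest size for which probe(size) succeeds.

    probe is a hypothetical callable: it should send a packet of `size` bytes
    with Don't Fragment set and return True if it gets through.
    Assumes probe(low) succeeds.
    """
    while low < high:
        mid = (low + high + 1) // 2
        if probe(mid):
            low = mid
        else:
            high = mid - 1
    return low

print(packets_needed(1_000_000, 1500), round(header_overhead_pct(1_000_000, 1500), 2))  # 685 2.74
print(packets_needed(1_000_000, 576), round(header_overhead_pct(1_000_000, 576), 2))    # 1866 7.46
# Simulated path, e.g. a PPPoE link that only carries 1492-byte packets:
print(find_path_mtu(lambda size: size <= 1492))  # 1492
```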

Optimizing how applications and protocols are designed is another key factor. Efficient data serialization, such as moving from JSON to Protocol Buffers, can cut transmission and processing overhead. By adopting persistent connections to eliminate repeated handshakes, developers can reduce latency by up to 30% in some web applications.
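A back-of-the-envelope model shows why persistent connections help. It assumes each request/response costs one round trip and each new connection costs extra round trips for setup (one for the TCP handshake, plus one more for a full TLS 1.3 handshake). It deliberately ignores server processing, TCP slow start, session resumption, and HTTP/2 multiplexing, so treat it as an illustration of the mechanism rather than a prediction; it is not meant to reproduce the 30% figure above.

```python
def total_time_ms(requests, rtt_ms, setup_rtts, persistent):
    """Time to complete `requests` sequential requests under a simplified model.

    setup_rtts: round trips spent opening a connection (1 for TCP, 2 for TCP + TLS 1.3).
    persistent: reuse one connection (True) or open a new one per request (False).
    """
    if persistent:
        return setup_rtts * rtt_ms + requests * rtt_ms
    return requests * (setup_rtts + 1) * rtt_ms

# 10 sequential requests over a 40 ms round trip, TCP + TLS 1.3
print(total_time_ms(10, 40, 2, persistent=False))  # 1200
print(total_time_ms(10, 40, 2, persistent=True))   # 480
```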

TCP tuning involves adjusting the parameters that manage how data is transmitted and acknowledged. Tweaking settings like the TCP window size keeps data flowing on links with a large bandwidth-delay product, meaning high bandwidth combined with a long round-trip time: if the window is too small, the sender sits idle waiting for acknowledgments. It's a delicate balance, since oversized buffers can add queuing delay of their own, but it pays off when you get it right. For a more complete look at improving your connection, this network setup and optimization guide is a great resource.
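The window size a link needs follows from its bandwidth-delay product (BDP): bandwidth multiplied by round-trip time gives the number of bytes that must be in flight to keep the pipe full. The helper names below are illustrative; the arithmetic shows why the classic 64 KiB TCP window (without the window-scaling option from RFC 7323) caps a single flow far below a 100 Mbit/s link's capacity at a 50 ms RTT.

```python
def bdp_bytes(bandwidth_mbps, rtt_ms):
    """Bytes that must be unacknowledged in flight to fill the link."""
    return bandwidth_mbps * 1_000_000 / 8 * rtt_ms / 1000

def max_throughput_mbps(window_bytes, rtt_ms):
    """A single TCP flow can send at most one window of data per round trip."""
    return window_bytes * 8 / (rtt_ms / 1000) / 1_000_000

print(bdp_bytes(100, 50))                          # 625000.0
print(round(max_throughput_mbps(65_535, 50), 2))   # 10.49
```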

Isolate Traffic with VLANs

Another incredibly effective strategy is to segment your network using VLANs, or Virtual Local Area Networks. A VLAN logically carves up a single physical network into multiple, isolated digital islands. Think of it as creating separate, smaller networks that can't interfere with each other, even though they share the same hardware.

This separation is a powerful way to cut down on network "chatter" and congestion. On a flat network, every broadcast message gets sent to every single device, creating a storm of unnecessary traffic that can bog everything down.

Practical VLAN Segmentation for a Hotel:

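- VLAN 10 (Guests): All guest Wi-Fi traffic lives here, isolated for security. This keeps guest devices away from hotel systems (combined with client isolation on the access points, it also keeps guests from reaching each other's devices) and keeps their streaming habits from impacting hotel operations.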

- VLAN 20 (Admin): Used for front desk computers, property management systems, and other internal staff devices. This network is secured and prioritized for business-critical functions.

- VLAN 30 (IoT Devices): Smart locks, thermostats, and security cameras get their own VLAN. This contains their traffic and bolsters security.

- VLAN 40 (VoIP Phones): By putting all VoIP phones on their own VLAN, it becomes incredibly simple to apply specific QoS rules and guarantee perfect call quality.

By implementing VLANs, a hotel or multi-family property can drastically reduce broadcast traffic, tighten security, and manage different data streams more effectively. This segmentation directly contributes to lower latency for everyone by ensuring one group's traffic doesn't slow down another's.
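On the wire, VLAN membership and layer-2 priority travel together in the 4-byte IEEE 802.1Q tag, which is what lets a switch apply QoS to the voice VLAN. The sketch below packs and unpacks that tag with Python's `struct` module. Pairing VLAN 40 from the hotel example with priority code point (PCP) 5 is an assumption: 5 is a common choice for voice, but match whatever your switch vendor's QoS policy expects.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks a frame as VLAN-tagged

def dot1q_tag(vlan_id, pcp=0, dei=0):
    """Build an 802.1Q tag: TPID, then TCI = 3-bit PCP | 1-bit DEI | 12-bit VLAN ID."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("VLAN ID must be between 1 and 4094")
    if not 0 <= pcp <= 7 or dei not in (0, 1):
        raise ValueError("PCP must be 0-7 and DEI must be 0 or 1")
    tci = (pcp << 13) | (dei << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)

def parse_dot1q_tag(tag):
    """Decode a 4-byte 802.1Q tag back into its fields."""
    tpid, tci = struct.unpack("!HH", tag)
    return {"tpid": tpid, "pcp": tci >> 13, "dei": (tci >> 12) & 1, "vlan_id": tci & 0x0FFF}

voice_tag = dot1q_tag(40, pcp=5)  # VoIP VLAN from the hotel example
print(voice_tag.hex())            # 8100a028
print(parse_dot1q_tag(voice_tag))
```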

When software tweaks and configuration changes have taken you as far as they can go, it's time to look at the hardware. Making smart, strategic investments in your physical network infrastructure can deliver a massive leap in performance that no amount of software tuning can ever hope to match.

This isn't just about buying the latest and greatest tech. It's about building a rock-solid foundation that eliminates the physical limitations holding you back—the speed of data through a cable, the processing power of your core devices, and the efficiency of your network's design.


Moving from Copper to Fiber Optics

For years, copper cabling was the lifeblood of our networks. But in a world awash with high-resolution video, cloud applications, and countless connected devices, copper is often the most significant bottleneck.

Upgrading your network’s backbone to fiber-optic cabling is one of the most powerful moves you can make to crush latency. Fiber carries data as pulses of light, which lets it deliver far more bandwidth over much longer runs than copper, and it is immune to the electromagnetic interference that can cripple copper wire performance.

The performance jump is dramatic. Studies have shown that moving to fiber can cut latency by up to 60% compared to older DSL connections. The real-world numbers are just as compelling: in 2023, the median idle latency for fiber networks was consistently below 25ms, while cable and DSL often struggled to stay under 50ms.

For a senior living community, this is more than a simple upgrade. The low, stable latency from a fiber network is what makes critical telehealth services possible, ensuring a clear, reliable video connection when a resident is speaking with their doctor. The return on investment here is measured in resident well-being and improved operational capacity.

Think of an investment in fiber as future-proofing your property. It provides the raw speed and low-latency performance needed to handle whatever comes next, from 4K security camera streams to complex IoT ecosystems.

When you're undertaking a major cabling project, getting the installation right is critical. Our guide on how to install data cabling for optimal network performance is a great resource to help you plan and execute properly.

Fiber optics stands out as the clear winner for low-latency performance, a crucial factor for real-time applications and a responsive user experience.

Comparing Latency Across Network Technologies

| Technology | Typical Median Idle Latency | Key Latency Factor |
|---|---|---|
| Fiber-to-the-Home (FTTH) | < 25 ms | Light signals travel long distances with minimal degradation and no electromagnetic interference. |
| Cable (DOCSIS 3.1+) | 25-50 ms | Modern standards have reduced latency, but the shared medium can cause jitter. |
| DSL (VDSL2+) | 25-50 ms | Performance depends heavily on distance from the central office; closer is better. |
| Fixed Wireless Access (FWA) | 30-60 ms | Susceptible to line-of-sight issues and weather, which can increase latency. |
| Satellite (Starlink) | 30-60 ms | Low-Earth Orbit satellites are a huge improvement over GEO, but still trail terrestrial links. |
| Satellite (GEO) | 600+ ms | Extreme latency due to the vast distance signals must travel to and from orbit. |

While cable and DSL have improved, they can't match the combination of capacity, signal quality, and immunity to interference that makes fiber the superior choice for low-latency networking.

Eliminating Bottlenecks with Modern Switches and Routers

Your network's core switches and routers are its central traffic controllers. If they're old and underpowered, they create a massive internal traffic jam, no matter how fast your internet connection is. An aging switch with a slow backplane will start dropping packets and creating delays the moment traffic gets heavy.

Modern managed switches bring several latency-killing advantages to the table:

- Higher Throughput: They are built to handle far more simultaneous traffic without breaking a sweat, keeping data flowing smoothly across your LAN.

- Advanced QoS: Newer hardware gives you much more precise Quality of Service controls, so you can effectively prioritize time-sensitive traffic like VoIP or video calls.

- Non-Blocking Architecture: High-end switches operate at "wire speed," meaning they can handle traffic on all ports at their maximum rated speed without creating an internal bottleneck.
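Whether a switch is truly non-blocking comes down to simple arithmetic: its switching fabric must handle every port at full line rate in both directions at once. The port mix below (48 x 1 GbE access ports plus 4 x 10 GbE uplinks) is a hypothetical example; compare the result against the switching capacity on the vendor's datasheet, which is normally quoted as a full-duplex figure.

```python
def required_capacity_gbps(ports):
    """ports: list of (port_count, speed_gbps). Full duplex counts both directions."""
    return 2 * sum(port_count * speed for port_count, speed in ports)

def is_non_blocking(ports, fabric_capacity_gbps):
    """True if the fabric can carry every port at line rate simultaneously."""
    return fabric_capacity_gbps >= required_capacity_gbps(ports)

access_switch = [(48, 1), (4, 10)]  # hypothetical: 48 x 1 GbE + 4 x 10 GbE uplinks
print(required_capacity_gbps(access_switch))  # 176
print(is_non_blocking(access_switch, 176))    # True
print(is_non_blocking(access_switch, 128))    # False
```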

Upgrading these core devices ensures your internal network isn't the weak link. It lets you take full advantage of that new fiber backbone and high-speed internet connection. Of course, as you bring in new hardware, you need a plan for the old gear. It's worth understanding IT Asset Disposition to handle this process responsibly.

Intelligently Routing Traffic with SD-WAN

For any organization with multiple sites—think a property management company with buildings across town or a regional hotel chain—managing network traffic is a constant headache. Traditional WANs often force all traffic back to a central data center before it goes out to the internet. This practice, known as "traffic tromboning," is a latency nightmare.

This is where Software-Defined Wide Area Network (SD-WAN) changes the game.

SD-WAN is an intelligent technology that dynamically routes traffic over the most efficient path available, whether that's a dedicated fiber line, a business cable connection, or even a 5G wireless link.

For instance, if a user at a branch office needs to access a cloud app like Office 365, SD-WAN can send that traffic directly to the internet instead of backhauling it to headquarters first. This direct route can slash round-trip times. By constantly monitoring the health and latency of every available connection, SD-WAN ensures your most important applications always get the fastest, most reliable path possible.
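Conceptually, SD-WAN path selection combines continuous link measurements with per-application policies. This minimal sketch assumes the latency, jitter, and loss figures come from probes the SD-WAN appliance already runs; the `SLA` thresholds and the `score` weighting are hypothetical values for illustration, not any vendor's algorithm.

```python
from dataclasses import dataclass

@dataclass
class PathStats:
    name: str
    latency_ms: float
    jitter_ms: float
    loss_pct: float

# Hypothetical SLA for a voice/video traffic class.
SLA = {"latency_ms": 150.0, "jitter_ms": 30.0, "loss_pct": 1.0}

def meets_sla(path, sla=SLA):
    return (path.latency_ms <= sla["latency_ms"]
            and path.jitter_ms <= sla["jitter_ms"]
            and path.loss_pct <= sla["loss_pct"])

def score(path):
    # Illustrative weighting: jitter and loss hurt real-time traffic more than raw latency.
    return path.latency_ms + 2 * path.jitter_ms + 50 * path.loss_pct

def pick_path(paths):
    # Prefer links that meet the SLA; if none do, fall back to the best of all links.
    eligible = [p for p in paths if meets_sla(p)] or paths
    return min(eligible, key=score)

paths = [
    PathStats("fiber", 18, 2, 0.0),
    PathStats("cable", 35, 12, 0.2),
    PathStats("5g", 45, 25, 2.5),
]
print(pick_path(paths).name)  # fiber
```

If the fiber link starts dropping packets, it falls out of the eligible set and traffic moves to the next-best link on the following measurement cycle.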

Mastering Wi-Fi for Low-Latency Environments

In a world that runs on wireless, a poorly designed Wi-Fi network is easily one of the most common—and frustrating—sources of high latency. For commercial spaces like hotels or apartment buildings, where hundreds of devices are all fighting for airtime, a "set it and forget it" approach just won't fly. If you want to tackle Wi-Fi latency, you need a strategy that goes way beyond just plugging in more access points.

The real goal here is to create a clean, efficient, and intelligent wireless environment. It's about carefully managing the invisible radio frequency (RF) spectrum to cut down on interference and guide every device to the best possible connection.

The Foundation: Proper AP Placement

I can't overstate this: the physical location of your wireless access points (APs) is the single most important factor in Wi-Fi performance. Get it wrong, and you'll create coverage gaps (dead zones) and areas where signals from different APs overlap too much, causing what we call co-channel interference. Devices sharing a channel have to take turns transmitting, and collisions force them to resend data; both are direct killers of low latency.

This is why a professional wireless site survey isn't optional; it's essential. Using specialized tools, a survey maps out the RF environment in your building, pinpointing the ideal spots to mount APs for consistent coverage without creating a chaotic mess of conflicting signals.

For a hotel, this might mean placing APs in hallways to cover a block of rooms, but also being very careful about signal-blocking materials like concrete firewalls or elevator shafts. In an apartment complex, it's a delicate balance of ensuring every unit has a strong, clean signal without being blasted by interference from the neighbors' APs. Finding the best wireless access point for business is a great start, but where you put it makes all the difference.

Strategic Channel Planning

Think of Wi-Fi channels as lanes on a highway. If every AP in your building is trying to use the same two or three lanes, you've created a massive traffic jam. Strategic channel planning is the art of assigning each AP to a non-overlapping channel, essentially giving each one its own clear lane for communication.

Most environments are absolutely saturated on the 2.4 GHz band. It only has three truly non-overlapping channels (1, 6, and 11) and is notorious for interference from non-Wi-Fi devices like microwaves, cordless phones, and Bluetooth speakers.

The 5 GHz and 6 GHz (Wi-Fi 6E) bands are your best friends for fighting latency. They offer a huge number of non-overlapping channels, creating a much wider and clearer highway for your data.

A good channel plan involves staggering channels so that neighboring APs are never on the same or adjacent channels. While modern wireless management systems can automate much of this, I've found that a manual audit is often necessary to really dial in the performance, especially in dense environments.
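Channel planning is essentially a graph-coloring problem: APs that can hear each other are neighbors, and neighbors should not share a channel. The greedy sketch below assumes you have a symmetric neighbor map from your site survey (the AP names are hypothetical) and places the most crowded APs first. It uses 1, 6, and 11 for 2.4 GHz; for 5 GHz you would pass a longer list, such as 36, 40, 44, 48, and so on.

```python
CHANNELS_24GHZ = [1, 6, 11]  # the only non-overlapping 2.4 GHz channels

def assign_channels(neighbors, channels=CHANNELS_24GHZ):
    """Greedy channel plan.

    neighbors: dict mapping each AP to the set of APs that can hear it.
    APs with the most neighbors are assigned first. Each AP gets the channel
    used by the fewest of its already-assigned neighbors (ties go to the
    earlier channel in the list), so reuse only happens when unavoidable.
    """
    plan = {}
    for ap in sorted(neighbors, key=lambda a: len(neighbors[a]), reverse=True):
        used = [plan[n] for n in neighbors[ap] if n in plan]
        plan[ap] = min(channels, key=used.count)
    return plan

# A hotel corridor where each AP hears only the APs next to it
corridor = {"ap-101": {"ap-103"}, "ap-103": {"ap-101", "ap-105"},
            "ap-105": {"ap-103", "ap-107"}, "ap-107": {"ap-105"}}
print(assign_channels(corridor))
```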

Advanced Wireless Steering Techniques

Once you've nailed the physical layout and channel plan, you can bring in some smarter software features to actively manage how devices connect. These techniques act like traffic controllers, intelligently guiding clients to ensure they get the fastest, most stable connection available.

- Band Steering: This feature actively encourages devices that can see both the 2.4 GHz and 5 GHz networks to connect to the cleaner, higher-performing 5 GHz or 6 GHz bands. It essentially makes the faster bands more attractive, steering clients away from the crowded 2.4 GHz mess.

- Client Steering (or AP Steering): Ever notice your phone stubbornly clinging to a weak signal from a faraway AP, even when you're standing right next to a closer one? Client steering fixes that. The network can proactively nudge that device to connect to the AP with the strongest signal, ensuring a solid connection as people move around.

Imagine a guest walking from their hotel room down to the lobby pool. With client steering, their phone is seamlessly handed off from their room's AP to the lobby's AP. This prevents their connection from degrading, keeping their video call or music stream running smoothly. By putting these strategies into practice, you can transform a laggy, unpredictable wireless network into a reliable, low-latency asset that people can count on.
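In practice, vendors nudge clients using standards such as 802.11k neighbor reports and 802.11v BSS Transition Management, but the decision of when to nudge is vendor-specific. This sketch shows one plausible rule with hysteresis: only steer a client whose signal has become weak, and only toward an AP that is clearly stronger, so devices don't ping-pong between APs. The thresholds and AP names are hypothetical.

```python
def steer_target(current_rssi_dbm, candidates, weak_dbm=-70, min_gain_db=8):
    """Return the AP a client should be steered to, or None to leave it alone.

    current_rssi_dbm: signal strength of the client's current connection.
    candidates: dict mapping other AP names to the RSSI (dBm) they hear from the client.
    """
    if current_rssi_dbm >= weak_dbm or not candidates:
        return None  # signal is still good enough: don't disturb the client
    best_ap, best_rssi = max(candidates.items(), key=lambda item: item[1])
    if best_rssi - current_rssi_dbm >= min_gain_db:
        return best_ap
    return None  # no AP is clearly better: avoid ping-ponging

print(steer_target(-78, {"lobby-ap": -55, "hall-ap": -72}))  # lobby-ap
```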

Shrinking Distance With Edge Computing and Caching

You can have the fastest fiber and a perfectly optimized Wi-Fi network, but you’ll always be at the mercy of one unchangeable factor: the speed of light. Physical distance is a hard limit on latency. The further data has to travel, the longer it takes. To truly crush latency, you have to find a way to shorten the trip.
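A rough calculation shows how hard that limit is. The sketch assumes signals in optical fiber travel at roughly 200 km per millisecond (about two-thirds of the speed of light in a vacuum) and ignores routing detours, queuing, and processing, so real-world figures will always be higher. The same arithmetic explains the geostationary satellite row in the technology comparison table.

```python
SPEED_IN_FIBER_KM_PER_MS = 200.0    # approx. two-thirds of c, typical for glass fiber
SPEED_OF_LIGHT_KM_PER_MS = 299.792  # in a vacuum, for radio links to satellites
GEO_ALTITUDE_KM = 35_786

def fiber_rtt_ms(distance_km):
    """Minimum round-trip propagation delay over a straight fiber run."""
    return 2 * distance_km / SPEED_IN_FIBER_KM_PER_MS

def geo_satellite_rtt_ms():
    """Request and reply each go up to a GEO satellite and back down: 4 legs.
    Assumes the satellite is directly overhead (the shortest possible path)."""
    return 4 * GEO_ALTITUDE_KM / SPEED_OF_LIGHT_KM_PER_MS

print(fiber_rtt_ms(1000))             # 10.0
print(round(geo_satellite_rtt_ms()))  # 477
```

No amount of tuning gets a round trip over 1,000 km of fiber below about 10 ms, which is why moving the content closer is the only real fix.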

The best way to do that is to bring your content and applications physically closer to the people who need them. It's a simple but powerful idea. Instead of forcing every user to pull data from a single, distant server, you serve it from a local machine just down the road. This is the core principle behind caching and edge computing.

Leverage Content Delivery Networks

One of the most effective tools in the arsenal is a Content Delivery Network (CDN). A CDN is essentially a global network of servers that stores copies (caches) of your static assets—images, videos, stylesheets, and code libraries—in numerous geographic locations.

When someone visits your website, the CDN intelligently routes their request to the nearest server, known as a Point of Presence (PoP). The data travels a few miles instead of halfway across the country. The difference is night and day. A well-implemented CDN can slash latency by 50% or more, especially for users far from your main server. The business impact is real: Amazon famously found that every additional 100ms of latency cost them 1% in sales. As a post on M247 Global’s blog puts it, speed is money.
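Real CDNs steer users to a PoP with techniques like anycast routing or DNS-based geolocation, so this is a simplified illustration rather than how any particular provider works: given a client's RTT probes to a few PoPs (names and numbers are hypothetical), pick the one with the lowest median. Using the median rather than the mean keeps one slow probe from skewing the choice.

```python
from statistics import median

def nearest_pop(probe_rtts_ms):
    """Return the PoP whose RTT probes have the lowest median."""
    return min(probe_rtts_ms, key=lambda pop: median(probe_rtts_ms[pop]))

probes = {
    "chicago": [12, 14, 90],       # one outlier probe
    "dallas": [28, 30, 27],
    "frankfurt": [105, 110, 104],
}
print(nearest_pop(probes))  # chicago
```

With a plain average, the single 90 ms outlier would have pushed Chicago behind Dallas.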

Selecting and Implementing a CDN

Getting started with a CDN is surprisingly straightforward and usually just involves a simple DNS change. The real work is in choosing the right partner.

Here’s what I look for when evaluating a provider:

- Global Footprint: Where are their servers? A CDN is only useful if its PoPs are close to your key user bases. Check their network map.

- Performance: Don’t just take their word for it. Look for independent performance benchmarks and read real user reviews to gauge their speed and reliability.

- Ease of Use: A clean, intuitive dashboard is a must. You need to be able to easily configure caching rules, purge content when it's updated, and see performance metrics at a glance.

- Security Features: Modern CDNs often bundle in critical security services like DDoS mitigation and a Web Application Firewall (WAF), which is a huge bonus.
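The caching rules and purge controls mentioned above boil down to a time-to-live (TTL) cache at each PoP. This toy version is a sketch only: `fetch_from_origin` is a hypothetical callable standing in for a request to your origin server, and the clock is injectable so the behavior can be demonstrated without waiting.

```python
import time

class EdgeCache:
    """Toy PoP cache: serve stored copies until their TTL expires, with manual purge."""

    def __init__(self, fetch_from_origin, clock=time.monotonic):
        self._fetch = fetch_from_origin  # hypothetical: path -> response body
        self._clock = clock
        self._store = {}                 # path -> (expires_at, body)
        self.hits = 0
        self.misses = 0

    def get(self, path, ttl_s=3600):
        now = self._clock()
        entry = self._store.get(path)
        if entry is not None and entry[0] > now:
            self.hits += 1
            return entry[1]              # fresh copy: no trip to the origin
        self.misses += 1
        body = self._fetch(path)         # expired or missing: go to the origin
        self._store[path] = (now + ttl_s, body)
        return body

    def purge(self, path):
        """Drop a cached object, e.g. after the photo or video is updated."""
        self._store.pop(path, None)

# Usage with a fake clock and a fake origin
fake_now = [0.0]
origin_calls = []

def fake_origin(path):
    origin_calls.append(path)
    return f"{path} v{len(origin_calls)}".encode()

cache = EdgeCache(fake_origin, clock=lambda: fake_now[0])
cache.get("/tour.mp4", ttl_s=60)   # miss: fetched from origin
cache.get("/tour.mp4", ttl_s=60)   # hit: served from the edge
fake_now[0] = 61.0
cache.get("/tour.mp4", ttl_s=60)   # TTL expired: fetched again
print(cache.hits, cache.misses)    # 1 2
```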

Imagine a multi-family property trying to lease apartments online. With a CDN, their high-resolution photos and virtual tour videos load instantly for potential renters, whether they're searching from across town or across the country.

Bringing Computation to the Edge

CDNs are fantastic for static content, but what about dynamic, data-intensive applications? That’s where edge computing comes in. It takes the "bring it closer" concept a step further by moving the actual application logic and data processing to the user's location.

Edge computing is about processing data right where it's generated. This completely eliminates the round-trip delay to the cloud, unlocking the ultra-low latency needed for applications where every millisecond counts.

Instead of sending raw data to a central cloud server for analysis, you can run the numbers on a local edge server right on your property. This is a total game-changer for any system that needs to react in real time.

Real-World Edge Computing Example

Let's look at an integrated security system in a large apartment complex. The system uses AI-powered cameras to detect unauthorized access in real time.

- Without Edge Computing: The camera streams video to a cloud server hundreds of miles away. The server analyzes the feed and, if it spots a problem, sends an alert back to the on-site security desk. That round trip could easily introduce a delay of several seconds.

- With Edge Computing: A small server on the property analyzes the camera feeds locally. It can identify a threat and trigger an instant alert to security personnel in under a second.

That near-instantaneous response is only possible because the long journey to the cloud was eliminated. For critical systems—from security and access control to building automation—edge computing is the ultimate weapon for minimizing network latency.

Answering Your Top Questions About Network Latency

When you start digging into network performance, the same questions tend to pop up time and time again. Let's tackle some of the most common ones that IT managers and property owners ask when they're trying to get a handle on latency.

Getting these fundamentals right can save you from chasing the wrong solutions and help you focus on what will actually make a difference for your users.

What Is a Good Latency for My Business?

This is the big one, and the honest answer is, "it depends." There's no magic number that works for everyone. The best way to think about it is to focus on what your most critical applications need to function well.

- VoIP and Video Conferencing: For a crisp, clear conversation without that awkward lag, you'll want to be under 75ms. Anything over 150ms starts to feel noticeably delayed.

- General Web Browsing: People are impatient. A latency of under 100ms to frequently visited servers will feel snappy. Once you get into the hundreds of milliseconds, users start to perceive a sluggish experience.

- Real-Time Systems (Security, IoT, Telehealth): This is where every millisecond counts. For things like AI-driven video monitoring or remote patient diagnostics, latency may need to be in the single digits (<10ms). This is where you absolutely need solutions like edge computing.

Instead of aiming for a generic target, identify the most latency-sensitive applications your business or residents rely on. Build your performance goals around their specific thresholds—that's how you deliver an experience that truly meets their needs.
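Those targets are easy to encode as an automated check against your monitoring data. The application class names below are hypothetical labels; the limits come straight from the guidance above, and since they are phrased as "under" a value, the comparison is strict.

```python
# Upper bounds in milliseconds, taken from the targets above.
TARGETS_MS = {"voip_video": 75, "web": 100, "realtime": 10}

def check_targets(measured_ms, targets=TARGETS_MS):
    """Map each measured application class to True if it is under its target."""
    return {app: measured_ms[app] < limit
            for app, limit in targets.items() if app in measured_ms}

print(check_targets({"voip_video": 48, "web": 62, "realtime": 18}))
# {'voip_video': True, 'web': True, 'realtime': False}
```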

Does More Bandwidth Mean Less Latency?

Not necessarily, and this is probably the most common misconception out there. It’s easy to think they’re the same thing, but they measure two very different aspects of network performance.

Let's use an analogy. Think of your network connection as a highway.

- Bandwidth is the number of lanes. More lanes mean more cars (data) can travel at the same time.

- Latency is the speed limit. It dictates how fast a single car can get from point A to point B.

Adding more lanes (increasing bandwidth) is a huge help if your problem is traffic jams (congestion). But it won't change the speed limit. If the physical distance is the bottleneck, all the bandwidth in the world won't make the data travel any faster. This is precisely why things like fiber optic connections and local caching are so critical for tackling latency head-on.
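The analogy can be made concrete with a simplified model of a single web request: total time is one round trip plus the time to push the bytes onto the link. This sketch ignores handshakes, TCP slow start, and server time, and the 100 KB page, 50 ms RTT, and link speeds are hypothetical numbers.

```python
def fetch_time_ms(size_bytes, bandwidth_mbps, rtt_ms):
    """One round trip plus serialization time (size / bandwidth), in milliseconds."""
    serialization_ms = size_bytes * 8 / (bandwidth_mbps * 1_000_000) * 1000
    return rtt_ms + serialization_ms

page_bytes = 100_000  # a 100 KB page
print(fetch_time_ms(page_bytes, 100, 50))   # about 58 ms
print(fetch_time_ms(page_bytes, 1000, 50))  # about 50.8 ms
print(fetch_time_ms(page_bytes, 100, 25))   # about 33 ms
```

Going from 100 Mbit/s to 1 Gbit/s saves about 7 ms here, while halving the round-trip time saves 25 ms.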

How Does Wi-Fi 6 Affect Latency?

Wi-Fi 6 (also known as 802.11ax) makes a massive difference, especially in crowded, multi-tenant environments where you have dozens of devices competing for airtime. The secret sauce is a feature called OFDMA (Orthogonal Frequency-Division Multiple Access).

In older Wi-Fi generations, an access point could essentially only "talk" to one device at a time, making everyone else wait their turn. OFDMA changes the game by allowing a single transmission from the AP to carry data for multiple devices at once.

Imagine a delivery truck that can drop off packages at several houses on the same trip instead of returning to the depot after each one. It dramatically cuts down on wait times and contention, which directly translates to lower latency for everyone. For a hotel lobby, apartment complex, or senior living community, upgrading to Wi-Fi 6 is one of the most impactful moves you can make.

At Clouddle Inc, we design, install, and manage high-performance networks built to eliminate latency issues in demanding commercial environments. Our Network-as-a-Service model provides the latest technology with zero upfront cost, ensuring your property is always ahead of user expectations. Learn how we can build a faster, more reliable network for you at https://www.clouddle.com.